I created this document as a clear guide to replacing boot disks in a ZFS pool on Proxmox, mainly because the procedure in the official documentation was not completely clear to me.

Source documentation: https://pve.proxmox.com/wiki/ZFS_on_Linux

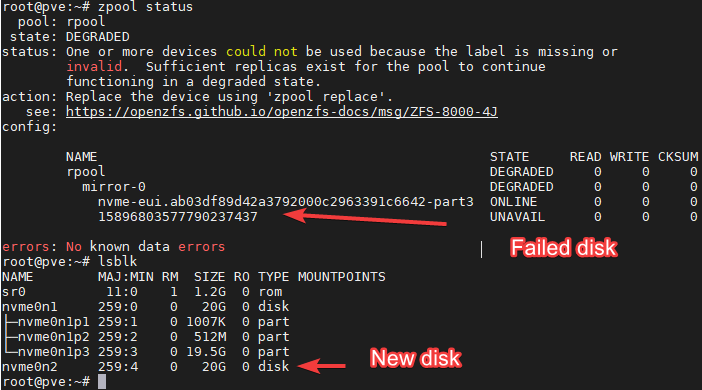

In my case I was dealing with a RAID1 (mirror) ZFS pool in which both drives were bootable.

I wasn’t aware these drives had 3 partitions, which I had to replicate to the new drive in order to perform the proper replacement.

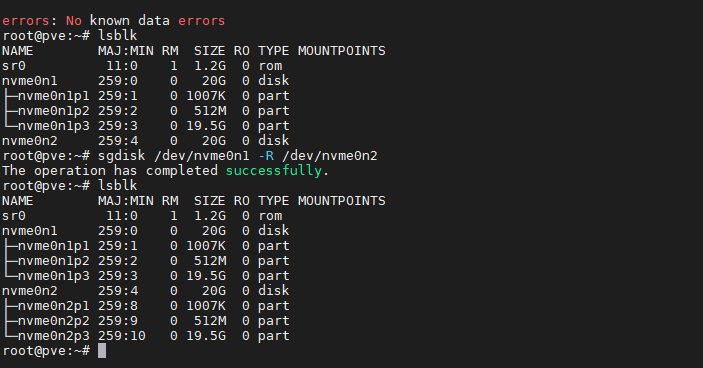

We replicate these partitions with the following commands:

sgdisk <healthy bootable device> -R <new device> - (use /dev/diskname)

sgdisk -G <new device> - (use /dev/diskname)

The second command randomizes the GUIDs of the new disk and of the partitions copied from the surviving drive, so that they are unique. It’s a bad idea to have disks with cloned GUIDs.

In the example above we see that nvme0n1 is the remaining disk in the array, and it is in a good state.

nvme0n2 is the new disk, the one we are going to use to replace the failed one.

Knowing this we run the following commands:

sgdisk /dev/nvme0n1 -R /dev/nvme0n2

sgdisk -G /dev/nvme0n2

The last command should output: The operation has completed successfully.
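As a minimal sketch, the two sgdisk calls can be wrapped in a small shell function that refuses to run when an argument is missing or when source and target are the same device. The names `run` and `clone_partition_table` and the `DRY_RUN` variable are hypothetical helpers, not part of sgdisk or Proxmox. By default the function only prints the commands instead of executing them.

```shell
# Hypothetical helper: print the command when DRY_RUN=1 (the default),
# otherwise execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Copy the partition table from the healthy disk ($1) to the new disk ($2),
# then randomize the GUIDs on the new disk.
clone_partition_table() {
    src=$1
    dst=$2
    if [ -z "$src" ] || [ -z "$dst" ] || [ "$src" = "$dst" ]; then
        echo "error: need two different devices: <healthy disk> <new disk>" >&2
        return 1
    fi
    run sgdisk "$src" -R "$dst" && run sgdisk -G "$dst"
}
```

Calling `clone_partition_table /dev/nvme0n1 /dev/nvme0n2` prints the two commands for review. Setting `DRY_RUN=0` before the call executes them.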

Afterwards, we should see the partitions replicated on the new disk:

Now we need to add partition 3 to the ZFS pool. Previously I made the mistake of adding the complete disk, which destroys the partitions we just created and prevents you from installing the boot files on the disk.

Avoid that mistake: what we need to add to the pool is the partition, not the complete disk.

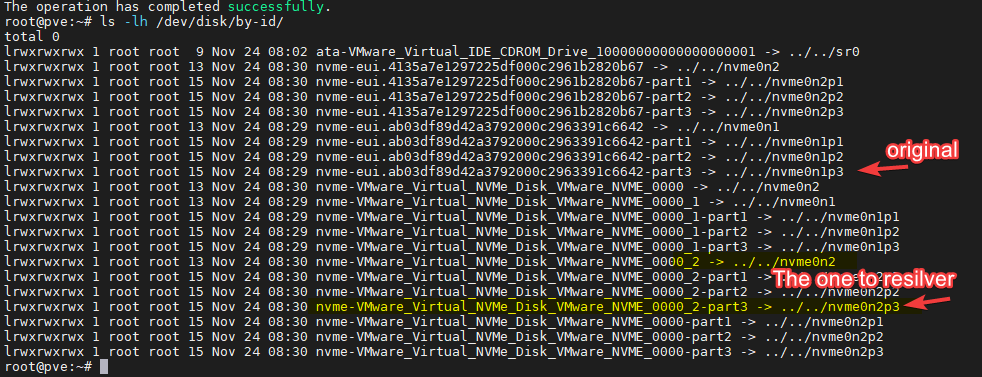

Let’s now identify the ID of the partition we want to add to the pool:

ls -lh /dev/disk/by-id/

We know the new disk is nvme0n2 and the partition is nvme0n2p3, so the ID we’ll use is:

nvme-VMware_Virtual_NVMe_Disk_VMware_NVME_0000_2-part3
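Instead of reading the `ls` listing by eye, the lookup can be sketched as a small function that prints every by-id name whose symlink resolves to a given device node. `find_by_id` is a hypothetical helper name, and its optional second argument (the directory to search) exists only so the function can be tried against a test directory. A partition may have more than one by-id name, so several lines may be printed.

```shell
# Hypothetical helper: print each name in a by-id style directory ($2,
# default /dev/disk/by-id) whose symlink resolves to the device node $1.
find_by_id() {
    dev=$(readlink -f "$1")
    dir=${2:-/dev/disk/by-id}
    for link in "$dir"/*; do
        [ -L "$link" ] || continue
        if [ "$(readlink -f "$link")" = "$dev" ]; then
            basename "$link"
        fi
    done
}
```

On the system from this guide, `find_by_id /dev/nvme0n2p3` would be the call to try.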

The command we now need to run has this form:

zpool replace -f <pool> <failed disk id> <new zfs partition>

From the first image in the document, we know the failed partition has this ID: 15896803577790237437

The resulting command should be:

zpool replace -f rpool 15896803577790237437 nvme-VMware_Virtual_NVMe_Disk_VMware_NVME_0000_2-part3

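Do NOT do it like this, since kernel device names such as /dev/nvme0n2p3 are not guaranteed to stay the same across reboots: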

zpool replace -f rpool 15896803577790237437 /dev/nvme0n2p3
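To guard against the two mistakes mentioned in this guide (adding the whole disk, or passing a /dev/nvme... kernel name), the command can be assembled by a small function that only accepts a by-id partition name. This is a sketch: `build_replace_cmd` is a hypothetical helper, and it only prints the command so it can be reviewed before it is run.

```shell
# Hypothetical helper: print the zpool replace command for
# <pool> <failed disk id> <new partition>, but only if the new device is a
# by-id name ending in -part followed by one or two digits.
build_replace_cmd() {
    pool=$1
    failed=$2
    new=$3
    case "$new" in
        /dev/disk/by-id/*-part[0-9]|/dev/disk/by-id/*-part[0-9][0-9]) ;;
        /*)
            echo "error: use a /dev/disk/by-id name, not $new" >&2
            return 1 ;;
        *-part[0-9]|*-part[0-9][0-9]) ;;
        *)
            echo "error: $new looks like a whole disk, expected a -partN name" >&2
            return 1 ;;
    esac
    echo "zpool replace -f $pool $failed $new"
}
```

With the values from this guide, `build_replace_cmd rpool 15896803577790237437 nvme-VMware_Virtual_NVMe_Disk_VMware_NVME_0000_2-part3` prints the command shown above, while passing /dev/nvme0n2p3 or the disk name without `-part3` returns an error.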

The zpool replace command starts a resilvering process, which you should monitor until it is 100% complete before moving forward.

We can monitor this process with the command:

watch zpool status -v
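If you would rather have the shell wait for you, the check can be sketched as a loop that polls `zpool status` until the resilver is no longer reported as running. This assumes the status text contains the phrase "resilver in progress" while the resilver runs, as current OpenZFS releases print it. `resilver_running` and `wait_for_resilver` are hypothetical helper names.

```shell
# Read `zpool status` output on stdin; succeed while a resilver is running.
# Assumption: the scan line contains the phrase "resilver in progress".
resilver_running() {
    grep -q 'resilver in progress'
}

# Hypothetical helper: poll the pool ($1) every $2 seconds (default 30)
# until no resilver is reported, then print the final status.
wait_for_resilver() {
    while zpool status "$1" | resilver_running; do
        sleep "${2:-30}"
    done
    zpool status "$1"
}
```

For the pool in this guide the call would be `wait_for_resilver rpool`. Check the final status for errors before continuing.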

Once this process is completed, you can move on to installing the boot files on the p2 partition of the new drive.

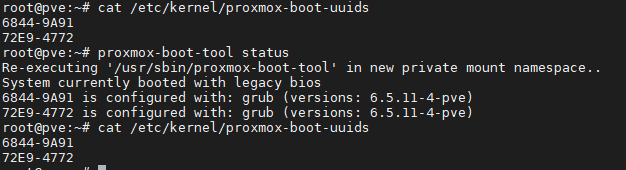

First we need to check whether we are booting with UEFI or with GRUB (legacy BIOS), using the following command:

proxmox-boot-tool status

Check whether the output says the system is booting with legacy BIOS or UEFI.
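As a cross-check, on Linux the directory /sys/firmware/efi exists only when the running system was booted through UEFI. The sketch below wraps that test in a function. `boot_mode` is a hypothetical helper name, and its optional argument exists only so the function can be tried against another path.

```shell
# Hypothetical helper: print "uefi" if the EFI firmware directory exists
# ($1, default /sys/firmware/efi), otherwise print "bios".
boot_mode() {
    efi_dir=${1:-/sys/firmware/efi}
    if [ -d "$efi_dir" ]; then
        echo uefi
    else
        echo bios
    fi
}
```

Running `boot_mode` with no argument reports the mode of the current boot. It does not replace the output of proxmox-boot-tool status, which also shows how the ESPs are configured.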

Next, format the ESP (EFI system partition) of the new disk. The general form of the command is:

proxmox-boot-tool format <new disk's ESP>

In our example the boot partition is /dev/nvme0n2p2, so the command is:

proxmox-boot-tool format /dev/nvme0n2p2

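After formatting the partition, we install the boot files. The general form of the command is shown here; the grub argument is optional, and the brackets only mark it as optional, so they are not typed:

proxmox-boot-tool init <new disk's ESP> [grub]

If we are using GRUB, the command should be:

proxmox-boot-tool init /dev/nvme0n2p2 grub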

If we are using UEFI, the command should be:

proxmox-boot-tool init /dev/nvme0n2p2
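The format and init steps can be summarized in a small sketch that prints the two commands for a given ESP and boot mode, following this guide’s mapping of GRUB to the extra `grub` argument and UEFI to no extra argument. `esp_commands` is a hypothetical helper, and it only prints the commands. Note that `grub` is passed as a plain word, without brackets.

```shell
# Hypothetical helper: print the proxmox-boot-tool commands for an ESP ($1)
# and a boot mode ($2: "grub" or "uefi").
esp_commands() {
    esp=$1
    mode=$2
    case "$mode" in
        grub) init_args="$esp grub" ;;
        uefi) init_args="$esp" ;;
        *)
            echo "error: mode must be 'grub' or 'uefi'" >&2
            return 1 ;;
    esac
    echo "proxmox-boot-tool format $esp"
    echo "proxmox-boot-tool init $init_args"
}
```

For example, `esp_commands /dev/nvme0n2p2 grub` prints the format command followed by the init command with the `grub` argument.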

Then we clean up the previous boot entries that are no longer relevant with the following command:

proxmox-boot-tool clean

You can now verify that the boot partitions were installed correctly with the following commands:

proxmox-boot-tool status

cat /etc/kernel/proxmox-boot-uuids

The output should look similar to this (legacy BIOS example):

Since this is a RAID1 pool with two disks, we should see only two entries, one per disk, in each output.
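The entry count can also be checked with a short sketch that counts the non-empty lines in /etc/kernel/proxmox-boot-uuids and compares the result with the number of disks in the pool. `check_esp_count` is a hypothetical helper name, and its optional second argument exists only so the function can be tried on a test file.

```shell
# Hypothetical helper: check that the UUID list ($2, default
# /etc/kernel/proxmox-boot-uuids) has exactly $1 non-empty lines.
check_esp_count() {
    expected=$1
    file=${2:-/etc/kernel/proxmox-boot-uuids}
    if [ ! -r "$file" ]; then
        echo "error: cannot read $file" >&2
        return 1
    fi
    actual=$(grep -c . "$file" || true)
    if [ "$actual" -eq "$expected" ]; then
        echo "ok: $actual ESP entries"
    else
        echo "mismatch: found $actual ESP entries, expected $expected" >&2
        return 1
    fi
}
```

For the two-disk mirror in this guide the call would be `check_esp_count 2`.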

You should now be able to boot from both drives!